If you ever needed to analyse big csv files in your laptop you probaly faced this issue before. You access a server, cd your way to the file you want to analyse, only to find it to be tens of gigabytes big.

Not only it will take a lifetime to download the thing, but you also run the risk of finding out, after the fact, that you have the wrong one. Bummer…

If you have access to data tools like PySpark and resources to run processes remotely, for example, things can be easier. But if resources are short and you need to quiclky go through data to find what you need so you can analyse it further, I got your back.

In this shot article I’ll show you some tips and tricks to find what you need and filter down the content to just what you’re looking for.

The dataset

In order to aproximate this example to a real life scenario, we can use a real dataset. In this case, I’ll be using the Large Car Dataset from Kaggle, which anyone can download and try out the code by yourself (you can find it in this link).

The dataset is 5.36 GB big, but can be zipped to aroun 850 MB. Still something I want to avoid downloading, if I can. It is comprised of 156 different columns and around 2M rows.

The example

Imagine that this dataset is aquired periodically, is transformed, and then sent to an application to be served for its final purpose. In this case, let’s say it’s an application to show the used cars that were available at one point so enthusiast can find the car of their dream so they can buy it and see if they are available or not.

If there are cars missing from the application, for whatever reason, one way of understanding where the issue is, might be to look at the source data, in this case, is the dataset.

Problem statement

The sedan cars are not showing in the application from 2010 and they cannot be found in the database for some reason. How do you ensure that the data is in the source or not?

Other points to consider:

- I know the dataset is big, but not sure how big it is

- My work computer is ok, but is not a massive data munching machine

- My internet connection is not the greates, so depending on the size of the file, it may take a while…. And my boss is breathing down my neck to get a solution

Solution

Let’s break the problem in smaller chunks… and the data as well, for that matter. The idea here is that we understand if the file has the information we are looking for and that we are able to donwload a subset of the records in the file for further analysis.

Finding the file

Log in the remote server that hosts the data in question. I know it’s a csv file and it’s name is large car something. Here’s the how to find it:

find / -type f -name "*large*car*.csv"

The structure of the code goes like this:

- /: the starting directory that you want to search. Using a slash will run the find command in all computer. If you know which directory it should be at, more or less, you can use include it here (i.e. /home/username/data)

- -type f: What type of object are you looking for? Use f for file and d for directory

- -name: if you want to specify that the content you are looking for is in the name of the file. If you don’t specify this, you’ll probably end up with results looking into the content of the file as well as the file name, which is not what we want.

- “*large*car*\.csv”: this is the structure that “find” will use to locate the file. The “*” means that you can have zero or more characters first, then you have the word “large”, zero or more characters (“*” again), the word car, zero or more characters again, a dot (which is escaped by the back slash, otherwise it will consider the dot as a metacharacter for any character) and csv.

This will most likelly show a bunch of messages ending in “Permission denied” or “Operation not permitted”, which is a bit in the way and doesn’t add a lot of value.

find: /Library/Trial: Operation not permitted

find: /System/Library/DirectoryServices/DefaultLocalDB/Default: Permission denied

This happens because the function find will output errors and warnings into the terminal as well as valid results. So we can avoid that by redirecting the error output to null. You can do this with the code below:

find / -type f -name "*large*car*\.csv" 2>/dev/null

You should get something like my example below:

/Users/username/dataset/large car dataset.csv

With the result, you can “cd” to thedirectory where the file is.

Taking a peek at the file

In order to see if the file has the content you want, you can use “head” to see part of the content, before manipulating it, just to make sure it is the file you want. Head shows the first 10 lines of a file, by default and is a great tool to use in case of our csv file. I think it would be ok to use the first 3 just to know what the header looks like and the first 2 items. I don’t need more than that.

head -n 3 "large car dataset.csv"

The result should be something like this:

vin,stockNum,firstSeen,lastSeen,msrp,askPrice,mileage,isNew,color,interiorColor,brandName,modelName,dealerID,vf_ABS,vf_ActiveSafetySysNote,vf_AdaptiveCruiseControl,vf_AdaptiveDrivingBeam,vf_AdaptiveHeadlights,vf_AdditionalErrorText,vf_AirBagLocCurtain,vf_AirBagLocFront,vf_AirBagLocKnee,vf_AirBagLocSeatCushion,vf_AirBagLocSide,vf_AutoReverseSystem,vf_AutomaticPedestrianAlertingSound,vf_AxleConfiguration,vf_Axles,vf_BasePrice,vf_BatteryA,vf_BatteryA_to,vf_BatteryCells,vf_BatteryInfo,vf_BatteryKWh,vf_BatteryKWh_to,vf_BatteryModules,vf_BatteryPacks,vf_BatteryType,vf_BatteryV,vf_BatteryV_to,vf_BedLengthIN,vf_BedType,vf_BlindSpotMon,vf_BodyCabType,vf_BodyClass,vf_BrakeSystemDesc,vf_BrakeSystemType,vf_BusFloorConfigType,vf_BusLength,vf_BusType,vf_CAN_AACN,vf_CIB,vf_CashForClunkers,vf_ChargerLevel,vf_ChargerPowerKW,vf_CoolingType,vf_CurbWeightLB,vf_CustomMotorcycleType,vf_DaytimeRunningLight,vf_DestinationMarket,vf_DisplacementCC,vf_DisplacementCI,vf_DisplacementL,vf_Doors,vf_DriveType,vf_DriverAssist,vf_DynamicBrakeSupport,vf_EDR,vf_ESC,vf_EVDriveUnit,vf_ElectrificationLevel,vf_EngineConfiguration,vf_EngineCycles,vf_EngineCylinders,vf_EngineHP,vf_EngineHP_to,vf_EngineKW,vf_EngineManufacturer,vf_EngineModel,vf_EntertainmentSystem,vf_ForwardCollisionWarning,vf_FuelInjectionType,vf_FuelTypePrimary,vf_FuelTypeSecondary,vf_GCWR,vf_GCWR_to,vf_GVWR,vf_GVWR_to,vf_KeylessIgnition,vf_LaneDepartureWarning,vf_LaneKeepSystem,vf_LowerBeamHeadlampLightSource,vf_Make,vf_MakeID,vf_Manufacturer,vf_ManufacturerId,vf_Model,vf_ModelID,vf_ModelYear,vf_MotorcycleChassisType,vf_MotorcycleSuspensionType,vf_NCSABodyType,vf_NCSAMake,vf_NCSAMapExcApprovedBy,vf_NCSAMapExcApprovedOn,vf_NCSAMappingException,vf_NCSAModel,vf_NCSANote,vf_Note,vf_OtherBusInfo,vf_OtherEngineInfo,vf_OtherMotorcycleInfo,vf_OtherRestraintSystemInfo,vf_OtherTrailerInfo,vf_ParkAssist,vf_PedestrianAutomaticEmergencyBraking,vf_PlantCity,vf_PlantCompanyName,vf_PlantCountry,vf_PlantState,vf_PossibleValues,vf_Pretensioner,vf_RearCrossTrafficAlert,vf_RearVisibilitySystem,vf_SAEAutomationLevel,vf_SAEAutomationLevel_to,vf_SeatBeltsAll,vf_SeatRows,vf_Seats,vf_SemiautomaticHeadlampBeamSwitching,vf_Series,vf_Series2,vf_SteeringLocation,vf_SuggestedVIN,vf_TPMS,vf_TopSpeedMPH,vf_TrackWidth,vf_TractionControl,vf_TrailerBodyType,vf_TrailerLength,vf_TrailerType,vf_TransmissionSpeeds,vf_TransmissionStyle,vf_Trim,vf_Trim2,vf_Turbo,vf_VIN,vf_ValveTrainDesign,vf_VehicleType,vf_WheelBaseLong,vf_WheelBaseShort,vf_WheelBaseType,vf_WheelSizeFront,vf_WheelSizeRear,vf_Wheels,vf_Windows

abc5f0360059cf7b6fa8368db57f220ab0d87d582bf6ce3af0571c7d48b759ca,11701A,2019-05-06,2019-05-06,1498,1498,0,False,Gray,N/A,MITSUBISHI,Eclipse Spyder,7514,,,,,,,,1st Row (Driver & Passenger),,,,,,,,,,,,,,,,,,,,,Not Applicable,,Not Applicable,Convertible/Cabriolet,,,Not Applicable,,Not Applicable,,,,,,,,Not Applicable,,,3000.0,183.07123228419,3.0,2,,,,,,,,,,,,,,,,,,Multipoint Fuel Injection (MPFI),,,,,,,,,,,MITSUBISHI,481,MITSUBISHI MOTORS NORTH AMERICA,1054,Eclipse Spyder,2321,2002,Not Applicable,Not Applicable,,,,,,,,,,MPI,,,,,,BLOOMINGTON-NORMAL,,UNITED STATES (USA),ILLINOIS,,,,,,,Manual,,,,SPORTS,,,,,,,,Not Applicable,,Not Applicable,,,,,,abc5f0360059cf7b6fa8368db57f220ab0d87d582bf6ce3af0571c7d48b759ca,,PASSENGER CAR,,,,,,,

e24402cc77f6fd2d6a9dd626ad92795b717814340f1ab17a2cea3ba34e629538,9055B,2019-05-06,2019-05-06,10589,10589,0,False,Super Black,N/A,NISSAN,Altima,7514,,,,,,,1st & 2nd Rows,1st Row (Driver & Passenger),,,1st Row (Driver & Passenger),,,,,,,,,,,,,,,,,,Not Applicable,,Not Applicable,Sedan/Saloon,,,Not Applicable,,Not Applicable,,,,,,,,Not Applicable,,,2500.0,152.55936023683,2.5,4,4x2,,,,,,,In-Line,,4,,,,,,,,,Gasoline,,,,,,,,,,NISSAN,478,NISSAN NORTH AMERICA INC,997,Altima,1904,2016,Not Applicable,Not Applicable,,,,,,,,position 6:Model change number code,,,,2nd row outboard and center 3-point manual belts,,,,CANTON,Nissan North America Inc.,UNITED STATES (USA),MISSISSIPPI,,,,,,,Manual,,,,,,,,Direct,,,,Not Applicable,,Not Applicable,,,,,,e24402cc77f6fd2d6a9dd626ad92795b717814340f1ab17a2cea3ba34e629538,,PASSENGER CAR,,,,,,,

Although it is far from ideal, but I can see that it has the information I need.

Finding the relavant data

Now let’s see if we can find data relevant to our objective. Just as a reminder, we’re looking for sedans in 2010. I’ll use grep to do that.

Grep will output any line that has the information we want. Because CSV has all information for each car in a single line, it will be perfect for our usecase.

Looking into our dataset, we can see that the dates (firstSeen and lastSeen) are shown before the type of car. So I can use this to make sure the inforation is there before any further analysis.

In order to filter down to what I want decreasing the number of false positives, I can once again use regex as my friend.

grep -i "2010.*sedan" large\ car\ dataset.csv

And then I can understand the size of the data in number of lines using wc (word count) with the -l argument, which would count the lines. Combining the two we would get the following statement returning 5.333 lines, which even Excel could work with.

grep -i "2010.*sedan" large\ car\ dataset.csv | wc -l

Preparing the file

Now that we know were the file is at and that we’re able to filter down the data to a subset of interest, we can move to create a new file with targeted data. We do this in two parts. First, we create a new file with the first line of the csv file so we have the headers present.

head -n 1 large\ car\ dataset.csv > large_car_sedan_2010.csv

Next, we append the result of our grep command to the created file:

grep -i "2010.*sedan" large\ car\ dataset.csv >> large_car_sedan_2010.csv

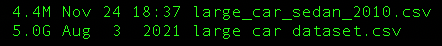

So if we see the size of the file, it has come down from 5.0 GB to a mearly 4.4 MB.

This can ealily be copied to your computer using scp or you can also compress it using zip, tar or some other tool of your choosing.

Conclusion

If you need to work on a CSV file that is too big to download, or you have limited resources/tools to do this remotely or locally, it may be a good idea to work with a subset of the content. This article sums up some techniques you can use in such cases.

But how about you? Are there any other tools that you use in such scenarios? Anything you recomend or would add?

In any case, I hope has helped in some way.

Have a great one!

Leave a comment